Learning Randomized Reductions and Program Properties

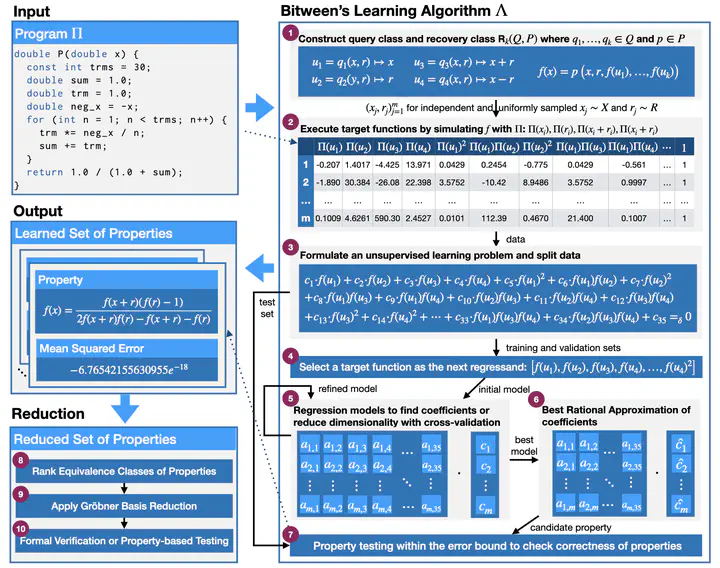

- We introduce Bitween, a linear regression-based automated learning algorithm that effectively discovers complex nonlinear randomized (self)-reductions and other arbitrary program invariants (e.g., loop invariants and post conditions) in mixed integer and floating-point programs.

- We present a rigorous theoretical framework for learning randomized (self)-reductions, advancing formal foundations in this area.

- We create a benchmark suite, RSR-Bench, to evaluate Bitween’s effectiveness in learning randomized reductions. This suite includes a diverse set of mathematical functions commonly used in scientific and machine learning applications. Our evaluation compares Bitween’s linear regression backend, which we call Bitween, against a Mixed-Integer Linear Programming (MILP) backend, which we call Bitween-Milp, showing that linear regression-based learning outperforms MILP in both scalability and stability within this domain.

- We implement Bitween in Python and demonstrate its performance on an extended version of NLA-DigBench, which includes nonlinear loop invariants and post-conditions. Our empirical evaluation shows that Bitween surpasses leading tools DIG and SymInfer in capability, runtime efficiency, and sampling complexity.