Abstract

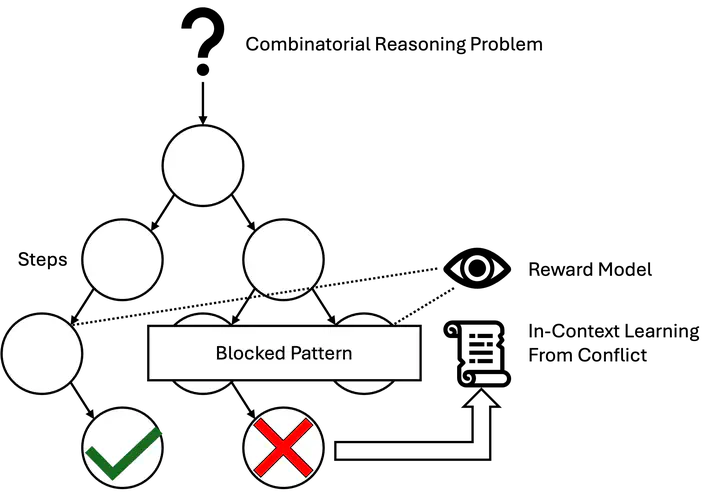

Recent advances in reasoning models have led to impressive performance in several areas of reason: first-order logic, mathematics, and computer programming to name a few. Yet these models do not scale to combinatorial reasoning tasks. These tasks require the model to find a solution out of a combinatorially large search space while reasoning correctly at each step of the search. Existing techniques for such problems are neuro-symbolic: they translate the problem into a formal representation and use symbolic solvers to conquer the combinatorial search space. These approaches are often limited to rigid reasoning tasks that have exact formal translations and even then, translation incurs an error overhead, leading to lower performance. We propose CDCL-IC, a Chain-of-Thought (CoT) reasoning technique that conquers combinatorial search problems through Conflict-Driven Clause Learning~(CDCL). Our technique uses CDCL to learn bad patterns during backtracking CoT reasoning and blocks it using in-context learning to prune the search space. We implement CDCL-IC for Sudoku, and show that our approach greatly outperforms both traditional CoT and o3-mini on 9x9 Sudoku problems.